Artificial intelligence promises to revolutionize how UX designers work, yet most of us stare at ChatGPT’s bland responses wondering why the “revolutionary” tool feels more like a mediocre intern than a creative partner.

The gap between AI’s potential and its actual usefulness isn’t a technology problem. It’s a communication problem. Just as users need clear navigation and thoughtful guidance to accomplish their goals, AI tools need structured, detailed instructions to deliver meaningful results. The difference between getting generic fluff and genuinely helpful output often comes down to how carefully you craft your prompts.

Why Most Designers Struggle With AI Tools

When we approach AI chatbots like ChatGPT, Claude, or Gemini, we often treat them like experienced colleagues who understand our context. We type something like “write an error message for my login screen” and expect intelligent, nuanced results. Instead, we get something generic that sounds like it was pulled from a 2010 design blog.

The problem isn’t the AI’s capability. These tools can generate remarkably sophisticated outputs when given proper direction. The issue is that without context, constraints, and clear objectives, AI falls back on the most common patterns it learned during training. For UX work, where specificity and user-centricity matter enormously, this generic approach fails.

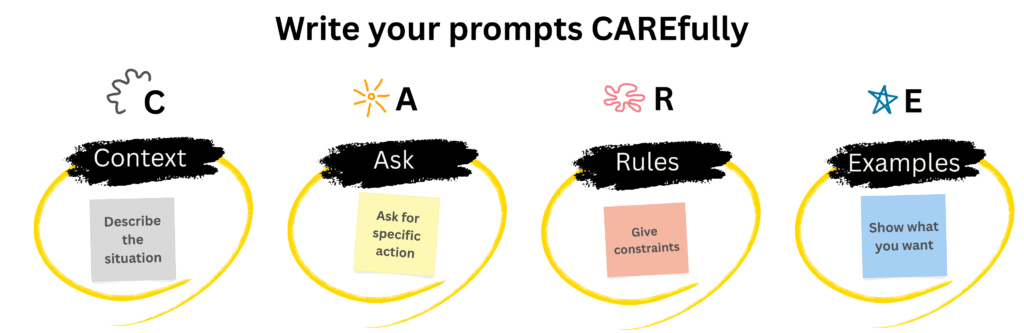

This is where the CARE framework comes in. Think of it as a mental checklist for structuring your AI conversations, ensuring you provide everything the system needs to generate genuinely useful results.

Understanding the CARE Framework

CARE stands for Context, Ask, Rules, and Examples. These four components form the foundation of effective AI prompting, particularly for complex creative work like UX design. While the acronym suggests a specific order, you can arrange these elements however makes sense for your particular task. The key is ensuring you include the relevant pieces.

Not every interaction with AI requires this level of detail. Simple information-seeking questions work fine with casual prompts. But when you need specific, polished outputs that align with your project requirements and brand guidelines, investing time in a well-structured prompt pays significant dividends.

CARE: Context, Ask, Rules, Examples

Context: Setting the Stage for Success

Context establishes the framing for your entire prompt. Think about explaining your situation to a new team member or consultant. What background would they need to provide informed, relevant advice?

For UX work, valuable context often includes your role and experience level, the product you’re designing, the type of company you work for, and the specific project at hand. You might describe user research findings, share details about your target audience, or explain the particular screen or feature you’re currently designing.

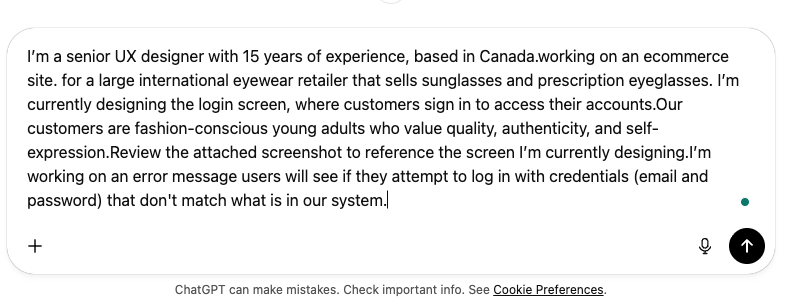

Imagine you’re working on an error message for an ecommerce site’s login screen. Your context might explain that you’re a senior UX designer working for an international eyewear retailer selling sunglasses and prescription glasses. You could note that your customers are fashion-conscious young adults who value quality and self-expression. This background helps the AI understand not just what you’re building, but who you’re building it for and why certain approaches might work better than others.

Some AI tools offer features that reduce repetitive context-setting. ChatGPT’s custom instructions feature lets you provide persistent background information that the system applies across conversations. Its memory feature goes further, drawing on your previous interactions to build an ongoing understanding of your work and preferences. These capabilities can significantly streamline your workflow once you’ve established your baseline context.

Ask: Making Clear, Specific Requests

Your “ask” is the actual task you want the AI to perform. Specificity matters enormously here. Rather than requesting “help with my error message,” you might ask the AI to generate fifteen different options for a login error message, evaluate them against specific criteria, rank the top five, and present them in a structured format with reasoning.

Strong asks often include several elements. You might define a role for the AI, such as acting as a UX writer focused on plain language communication. You specify the output format you need, whether that’s a table, a list, a paragraph of prose, or something else entirely. You indicate how many variations or options you want. For complex tasks, you can break down the process into discrete steps.

This step-by-step approach draws on chain-of-thought prompting, which encourages a model to work through intermediate steps instead of jumping straight to a final answer. Rather than asking for a polished result in one leap, you’re guiding the system through a logical progression: first generate options, then evaluate them, then rank them, then present the best results with explanations. This structured approach often produces better outcomes than asking for everything at once.

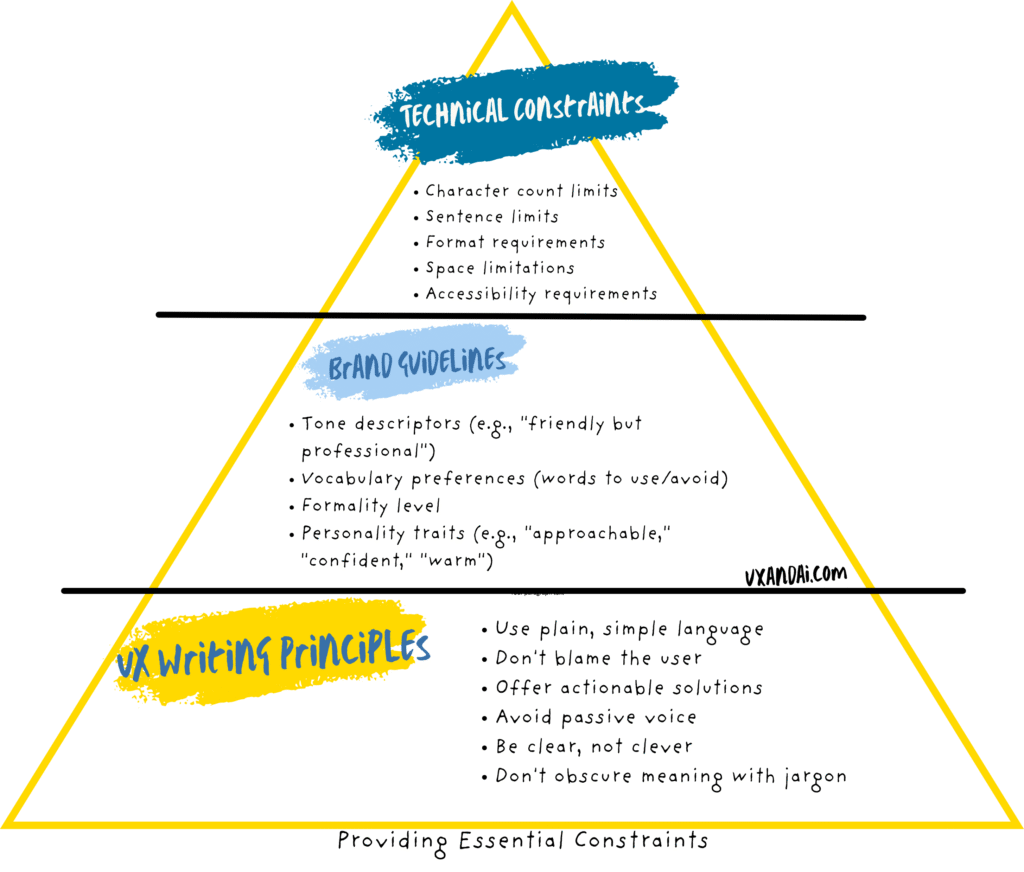

Rules: Providing Essential Constraints

Rules are the guardrails that shape AI’s output. Without constraints, generative AI tends to produce middling results that play it safe and aim for broad appeal. Rules help you get specific, appropriate results that actually fit your needs.

For UX work, rules often draw from established best practices. If you’re writing microcopy, you might specify using plain language, avoiding humor in frustrating situations, never blaming users, and offering constructive solutions rather than just identifying problems. These are principles any experienced UX writer knows, but the AI needs you to state them explicitly.

You should also include project-specific constraints. Your brand’s tone of voice guidelines matter here. If your company has a friendly, conversational voice, specify that, but also note where that friendliness should pull back. Technical constraints count too. If your error message needs to fit in a small modal with a character limit, tell the AI exactly how many characters it has to work with.

Product-specific rules help the AI understand your unique context. Maybe your users expect a certain level of formality, or perhaps they respond poorly to corporate language. Maybe you’re designing for accessibility and need to ensure screen reader compatibility. Whatever constraints shape your design decisions should also shape your AI prompts.

Examples: Showing What Good Looks Like

Examples bridge the gap between abstract instructions and concrete expectations. Whether you’re showing good examples to emulate or bad examples to avoid, providing reference points helps AI understand exactly what you want.

If you’re trying to improve existing copy, include it in your prompt and explain what needs improvement. Perhaps a developer suggested “Invalid credentials; please check your email and password,” but it feels too technical and formal for your brand. Sharing this context helps the AI understand not just what you don’t want, but why.

Good examples demonstrate success. You might share a message from elsewhere in your product that works well, explaining what makes it effective. Maybe “Add twelve dollars more for free shipping” succeeds because it provides a specific, actionable next step. This kind of concrete reference point is far more valuable than abstract descriptions of good writing.

The concept relates to a prompting technique called few-shot prompting, where you provide input-output pairs showing exactly what you want. For some tasks, like generating article titles, you might literally show the AI several article summaries paired with their actual titles. This direct modeling can be extremely powerful. For other tasks, like our error message example, simply showing output examples of what you want or don’t want suffices.
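To make the input-output structure concrete, here is a minimal Python sketch that formats example pairs into a few-shot prompt. The function name `build_few_shot_prompt`, the “Summary” and “Title” labels, and the sample pairs are hypothetical placeholders, not taken from any particular tool. The only idea it illustrates is that each example shows an input followed by its output, and the final input is left with an empty output slot for the model to complete.

```python
def build_few_shot_prompt(pairs, new_input, input_label="Summary", output_label="Title"):
    """Format (input, output) example pairs, then the new input with an empty output slot."""
    blocks = []
    for example_input, example_output in pairs:
        blocks.append(f"{input_label}: {example_input}\n{output_label}: {example_output}")
    # The unanswered final block signals the pattern the model should continue.
    blocks.append(f"{input_label}: {new_input}\n{output_label}:")
    return "\n\n".join(blocks)


# Hypothetical placeholder data; substitute real article summaries and their titles.
examples = [
    ("A study of how shoppers scan product pages on mobile.", "How Mobile Shoppers Scan Product Pages"),
    ("Guidance on writing error messages that don't blame users.", "Error Messages Without Blame"),
]
print(build_few_shot_prompt(examples, "Tips for structuring AI prompts for UX work."))
```

The output is plain text you could paste into any chat tool; no specific AI API is assumed.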

Putting It All Together: A Complete Example

Let’s see how these four components combine into a complete prompt. Imagine we’re creating error messages for that eyewear retailer’s login screen.

We start with background context, explaining our role as a senior UX designer with fifteen years of experience working on an ecommerce site for an international eyewear retailer. We describe the project, noting we’re designing the login screen where customers access their account details, specifically focusing on the error message shown when login credentials don’t match system records.

Next, we define the AI’s role, asking it to act as a UX writer focused on intuitive, straightforward, plain language. We provide clear steps: generate fifteen options for login error messages, review those options against our criteria, select the five best, rank them from best to worst with reasoning, and present the final five in a table with messages in the first column and reasoning in the second.

Our rules section establishes guidelines. Use plain language. Don’t try to be funny or clever. Don’t obscure the message’s meaning. Offer solutions and recommendations. Don’t blame users. Avoid passive voice. The tone should be approachable and conversational without becoming too casual or sloppy. Keep it under one hundred characters and no more than two sentences.

Finally, we provide examples. We note that “Add twelve dollars more for free shipping” works well because it gives specific, actionable guidance. We explain that “Oopsie, we couldn’t log you in” fails because it’s too casual in a frustrating situation. We share that a developer suggested “Invalid credentials; please check your email and password,” but it sounds too technical and formal.
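Because the four CARE components are simply labeled sections of text, a prompt like this one can be assembled from reusable parts. The Python sketch below shows one hypothetical way to do that: the `CarePrompt` class and its field names are illustrative inventions, and the content is paraphrased from the eyewear example. It only builds the prompt string; it doesn’t call any AI service.

```python
from dataclasses import dataclass, field


@dataclass
class CarePrompt:
    """Hypothetical container for the four CARE components."""
    context: str
    steps: list[str]                      # the Ask, broken into ordered steps
    role: str = ""                        # optional role for the AI, listed before the steps
    rules: list[str] = field(default_factory=list)
    examples: list[str] = field(default_factory=list)

    def render(self) -> str:
        ask_lines = [self.role] if self.role else []
        ask_lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        sections = ["Context:\n" + self.context, "Ask:\n" + "\n".join(ask_lines)]
        if self.rules:
            sections.append("Rules:\n" + "\n".join(f"- {rule}" for rule in self.rules))
        if self.examples:
            sections.append("Examples:\n" + "\n".join(f"- {ex}" for ex in self.examples))
        return "\n\n".join(sections)


prompt = CarePrompt(
    context=("I'm a senior UX designer with fifteen years of experience on the ecommerce site "
             "of an international eyewear retailer. I'm designing the login screen, specifically "
             "the error message shown when login credentials don't match our records."),
    role="Act as a UX writer focused on intuitive, straightforward, plain language.",
    steps=[
        "Generate fifteen options for the login error message.",
        "Review the options against the rules below.",
        "Select the five best and rank them from best to worst, with reasoning.",
        "Present the final five in a table: message in column one, reasoning in column two.",
    ],
    rules=[
        "Use plain language.",
        "Don't try to be funny or clever.",
        "Don't obscure the message's meaning.",
        "Offer solutions and recommendations.",
        "Don't blame users.",
        "Avoid passive voice.",
        "Be approachable and conversational without becoming too casual or sloppy.",
        "Keep each message under 100 characters and no more than two sentences.",
    ],
    examples=[
        'Good: "Add twelve dollars more for free shipping" gives a specific, actionable next step.',
        'Bad: "Oopsie, we couldn\'t log you in" is too casual for a frustrating situation.',
        'Bad: "Invalid credentials; please check your email and password" is too technical and formal.',
    ],
)
print(prompt.render())
```

Keeping rules and examples as lists makes it easy to add a constraint you forgot and regenerate the prompt when you iterate.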

When this comprehensive prompt runs through a modern AI model, the results can be remarkably good. The top suggestion might read: “Please check your email and password and try again.” It’s simple and direct, and it provides a clear next step. Another strong option could be: “The email or password doesn’t match our records. Try again.” It explains the problem clearly while providing straightforward instructions for resolution.

The Refinement Process: Iteration Matters

Even with a comprehensive, carefully structured prompt, you’ll rarely accept AI output exactly as generated. This is particularly true for writing, where tone and nuance matter enormously. Expect to iterate.

Start by checking whether the AI followed all your instructions. The more detailed your prompt, the more likely the system is to overlook or forget something. If important requirements were missed, ask it to try again, specifically addressing the overlooked details.
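Some rules, like the example’s limit of under one hundred characters and no more than two sentences, can be checked mechanically before you read the options for tone. The Python sketch below assumes exactly those two limits and flags candidates that break them; the function names are hypothetical. Its sentence counter is a rough heuristic (it would miscount abbreviations such as “e.g.”), and judgment-based rules like not blaming users or avoiding passive voice still need your own review.

```python
import re

MAX_CHARS = 100     # rule from the example: under one hundred characters
MAX_SENTENCES = 2   # rule from the example: no more than two sentences


def count_sentences(text: str) -> int:
    """Roughly count sentences as runs of ., !, or ? followed by whitespace or the end."""
    endings = re.findall(r"[.!?]+(?=\s|$)", text.strip())
    # Text with no terminal punctuation still counts as one sentence.
    return max(len(endings), 1)


def check_message(message: str) -> list[str]:
    """Return descriptions of the length rules a candidate message breaks."""
    problems = []
    if len(message) >= MAX_CHARS:
        problems.append(f"{len(message)} characters (limit: under {MAX_CHARS})")
    sentences = count_sentences(message)
    if sentences > MAX_SENTENCES:
        problems.append(f"{sentences} sentences (limit: {MAX_SENTENCES})")
    return problems


candidates = [
    "Please check your email and password and try again.",
    "The email or password doesn't match our records. Try again.",
]
for msg in candidates:
    print(f"{msg} -> {check_message(msg) or 'OK'}")
```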

You’ll often discover missing instructions only after seeing initial results. Perhaps you forgot to specify that error messages should never use technical jargon, or that they need to work for international audiences. Add these new constraints and run the prompt again.

Don’t hesitate to ask for more options, especially during ideation phases. If your initial prompt asked for five error message variations, request five more. Different iterations often surface ideas you hadn’t considered.

The most powerful approach combines multiple AI-generated options with your own UX expertise. Take the language you like from one option, the structure from another, and your knowledge of best practices to create something better than any single output. For instance, if the AI’s error messages lack constructive next steps, you might add a hyperlink allowing users to reset their password or contact support.

The Reality Check: When CARE Isn’t Worth the Effort

This level of detailed prompting requires significant time and thought. Current AI tools and models often need this much strategic context to produce good results, but that doesn’t mean the investment always pays off.

For some people and some tasks, crafting and iterating on complex prompts takes more time than simply doing the work directly. An experienced UX writer might write a dozen error message variations faster than they could construct a comprehensive prompt and refine the results. Someone less experienced with writing might find the AI approach significantly faster. Your mileage will vary based on your skills and the specific task.

AI tools handle some types of work much better than others. Writing a careful prompt won’t help if you’re asking the system to perform tasks it fundamentally can’t do well. You wouldn’t use ChatGPT to do your taxes, regardless of prompt quality. Smaller, more focused tasks generally yield better results than large, complex ones.

The key is experimentation and practice. The more you work with AI tools on different types of UX tasks, the better you’ll understand where they help and where they hinder. You’ll develop intuition about which situations benefit from detailed prompts and which situations call for just doing the work yourself. You’ll learn to recognize where AI tends to fail or get confused, helping you avoid wasting time on approaches unlikely to succeed.

Making CARE Part of Your Design Process

The CARE framework isn’t about making AI do your job. It’s about making AI a more effective tool when you choose to use it. Like any UX methodology, it provides structure to help you think through problems systematically and communicate requirements clearly.

Start experimenting with the framework on low-stakes tasks. Try it for brainstorming session titles, generating research discussion guide questions, or drafting email templates. Pay attention to which components matter most for different types of work. Notice when AI helps and when it doesn’t.

As you build experience, you’ll develop your own prompting patterns and preferences. You might find certain phrasings consistently produce better results. You might discover that for your work, context and examples matter more than elaborate rules. The framework is a starting point, not a rigid formula.

The future of UX design will likely involve increasing AI collaboration. Designers who learn to communicate effectively with these tools while maintaining critical judgment about their outputs will have significant advantages. The CARE framework is one step toward that future, helping us bridge the gap between AI’s capabilities and our needs as designers.

The revolution isn’t about AI replacing designers. It’s about designers who understand AI replacing designers who don’t.

Ready to improve your AI prompting skills? Start by taking one task you’d normally do manually and trying the CARE framework. Share your results and what you learned with your design team.